AI-moderated research is here to stay: Are you ready to let AI do your customer research?

AI-moderated research tools promise the depth of qualitative interviews at the speed and scale of a survey. But will these tools replace or supplement the work of human researchers?

Imagine if your market research could run on autopilot while you sip your morning coffee. Sounds like a dream? Well, AI-moderated research tools are making this a reality, offering insights at the speed of a Google search but with the depth of a heart-to-heart chat. But can these digital detectives truly replace the human touch? Let’s dig in.

How it works

Most AI-moderated research tools rely on GPT-powered chatbots to interview customers. They generally work in three steps (with visuals provided by Outset.ai serving as an illustrative example):

1. Design: Set the interview's objectives, customize the AI personality, customize questions with help from the tool, and provide contextual details about your company and audience to tailor the AI's guidance. Then distribute the interviews to participants directly or through panel providers.

2. Interview: An AI moderator conducts the interview, dynamically adjusting questions based on responses, with participants able to respond in multiple formats including text, audio, and video. Interviews are designed to be brief, typically 5 to 15 minutes. Some tools can also share images or videos to test new concepts, marketing work, or designs.

3. Analysis: Create reports on key themes, sentiment, and important quotes, and interact with the data using natural language queries for detailed analysis. Review audio or video clips for a more detailed perspective.

Curious what it’s like to be interviewed by an AI? Click here to try it for yourself using a custom GPT I set up. Or request a demo at Outset.ai or Tellet.ai.

Click here to be interviewed by an AI (requires a ChatGPT subscription)

What’s on offer

The AI-moderated research category is still quite new, with the first tools going live in 2023. Two players seem to be emerging ahead of the field: Outset.ai and Tellet.ai.

Outset was launched in 2022 in San Francisco, attracting 4.9M in funding so far.

Tellet was launched in 2023 in Amsterdam, attracting 400k in funding so far.

Additionally, there are several others, like Zenaix, Questly, and Pansophic. So far, they seem to offer similar features and a similar vision, and it will be interesting to see how these tools develop.

Fit in the researcher’s toolbox

With their ability to research qualitatively at a quantitative scale, AI-moderated research tools bridge the gap between qualitative and quantitative research, offering unique advantages in both areas:

Qualitatively, they identify sentiments and themes and extract meaningful quotes, stories, and insights, providing a rich, detailed understanding of what lies beneath the data.

Quantitatively, these tools use automated coding to count and categorize responses, turning qualitative feedback into measurable data that highlights key trends and the frequency of specific opinions.
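To make "automated coding" a bit more concrete, here is a minimal sketch in Python of turning open-ended answers into theme counts. It is an illustration only: the codebook, the keywords, and the sample responses are all made up, and simple keyword matching stands in for whatever language-model-based coding the actual tools use.

```python
from collections import Counter

# Hypothetical codebook (an assumption for illustration): theme -> keywords that signal it.
CODEBOOK = {
    "price": ["expensive", "cheap", "price", "cost"],
    "usability": ["easy", "confusing", "intuitive", "hard to use"],
    "support": ["support", "help desk", "response time"],
}


def code_response(text, codebook=CODEBOOK):
    """Return the set of themes whose keywords appear in one response."""
    lowered = text.lower()
    return {
        theme
        for theme, keywords in codebook.items()
        if any(keyword in lowered for keyword in keywords)
    }


def theme_frequencies(responses, codebook=CODEBOOK):
    """Count how many responses mention each theme (once per response)."""
    counts = Counter()
    for response in responses:
        counts.update(code_response(response, codebook))
    return counts


# Made-up responses, not real research data.
responses = [
    "The app is easy to use but far too expensive.",
    "Support took days to reply, and the price keeps going up.",
    "I found the checkout confusing.",
]

frequencies = theme_frequencies(responses)
for theme, count in frequencies.most_common():
    print(f"{theme}: {count}/{len(responses)} responses")
```

With a few hundred interviews instead of three, the same kind of tally shows which opinions are common and which are outliers.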

Will AI replace human interviewers?

AI-moderated research tools offer several benefits over human-moderated interviews, but are these enough to make human interviewers redundant? Let’s dig in.

Quick results. The most time-intensive part of research is often collecting and analyzing the data. For example, the Nielsen Norman Group reports it usually takes about 20.6 hours to collect data and another 16.4 hours to analyze the data needed for a customer journey map. AI tools can significantly speed up this process, often promising results within a day.

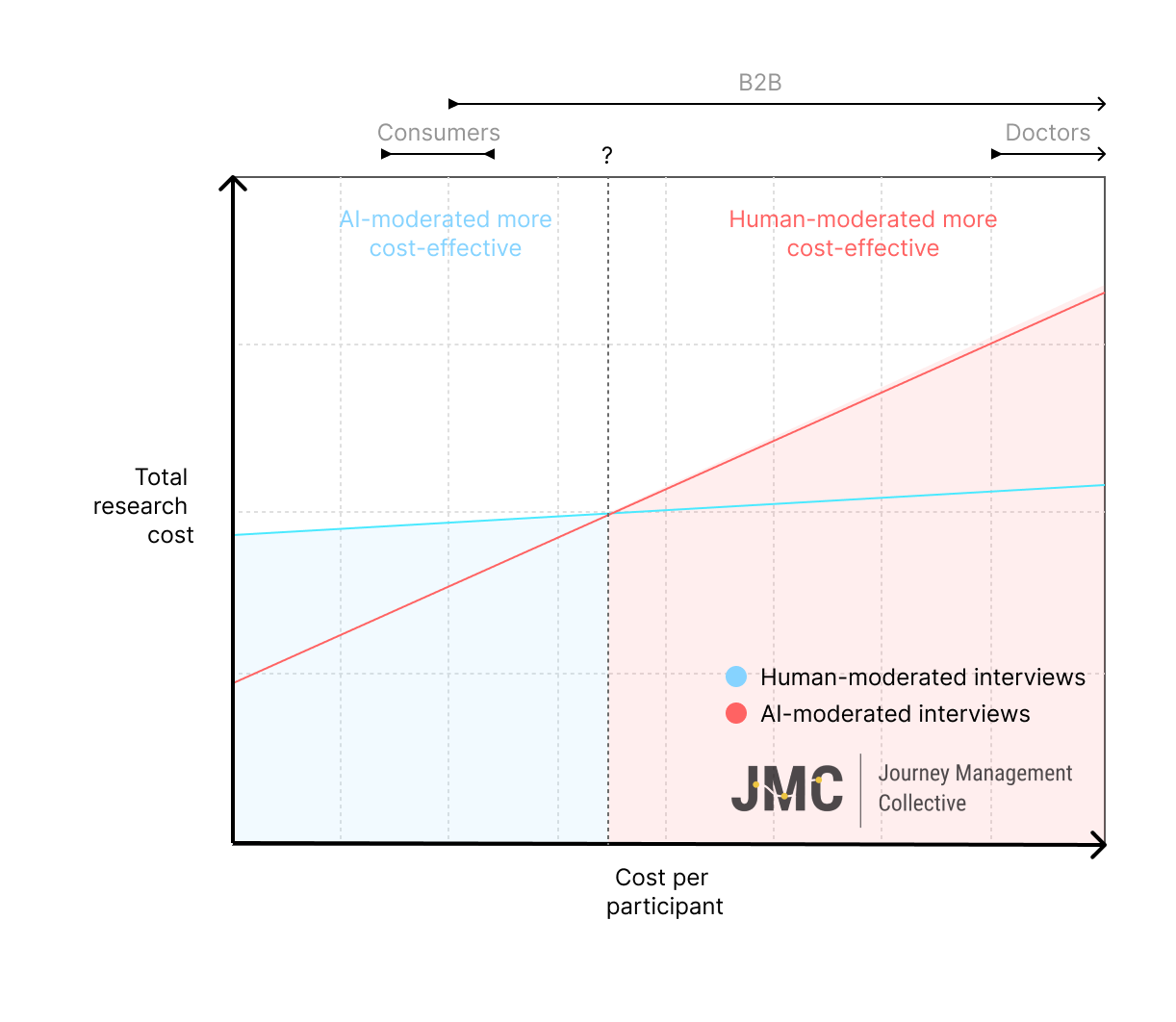

Lower cost (for certain groups). AI tools save time and greatly simplify the logistics of research studies, both of which save money. Because they rely on more respondents than human-led methods, the savings are largest for groups that are easy to recruit and incentivize: think of everyday shoppers, students, or hobbyist groups. For groups that are more costly to find and incentivize, like medical staff, the cost per participant is much higher, so there is a point above which human-moderated interviews become more cost-effective again.
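The break-even point can be sketched with a simple cost model. Every number below is an assumption chosen for illustration, not a figure from any vendor: a human-led study with 5 participants and 400 euros of moderator time per interview, against an AI-moderated study with 30 participants and a flat 500 euro platform fee.

```python
def human_study_cost(cost_per_participant, n_participants=5,
                     moderator_cost_per_interview=400.0):
    """Total cost of a human-led study: recruitment/incentive plus moderator time."""
    return n_participants * (cost_per_participant + moderator_cost_per_interview)


def ai_study_cost(cost_per_participant, n_participants=30, platform_fee=500.0):
    """Total cost of an AI-moderated study: flat platform fee plus recruitment/incentive."""
    return platform_fee + n_participants * cost_per_participant


def break_even_cost_per_participant(n_human=5, n_ai=30,
                                    moderator_cost_per_interview=400.0,
                                    platform_fee=500.0):
    """Recruitment cost per participant at which both approaches cost the same.

    Solves n_human * (r + m) = fee + n_ai * r for r.
    """
    if n_ai <= n_human:
        raise ValueError("this model assumes the AI study uses more participants")
    return (n_human * moderator_cost_per_interview - platform_fee) / (n_ai - n_human)


break_even = break_even_cost_per_participant()
print(f"Break-even recruitment cost: {break_even:.0f} euros per participant")

# Compare an easy-to-recruit group with a hard-to-recruit one (assumed costs).
for label, cost in [("students", 20), ("medical staff", 500)]:
    print(f"{label}: human-led {human_study_cost(cost):.0f} euros, "
          f"AI-moderated {ai_study_cost(cost):.0f} euros")
```

Under these assumed inputs, the AI-moderated study is cheaper below the break-even recruitment cost and the human-led study is cheaper above it. With your own numbers the threshold will shift, but the shape of the trade-off stays the same.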

Range of opinion. Effective customer research aims to reach 'insight saturation': the point where additional interviews no longer add significant new insights, usually said to be around the point where you’ve uncovered 80% of the insights there are to find. Traditional methods typically achieve this with fewer, longer interviews, delving deeply into a limited audience. AI interviews may be shorter and less in-depth individually, but their strength lies in the ability to run them at scale. For research focusing on a single topic or user group (e.g. prototype tests), the rule of thumb is that you generally need 5 user interviews, whereas Tellet estimates this to be around 30 for their AI-moderated tool. For deep exploratory interviews with multiple groups, you often need between 8 and 15 human-led interviews, whereas AI-moderated tools often work with 100 to 200 research participants.

This scale allows for interviews with a larger, more diverse, and potentially more representative range of participants in any language, which may yield a more complete spectrum of insights.
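Insight saturation can be made measurable once interviews have been coded into themes. The sketch below tracks the share of all observed themes found after each interview and reports when a threshold is first reached. The theme labels are invented, and using "all themes seen in the sample" as the total is itself an assumption, since the true number of insights is never known in advance.

```python
def saturation_curve(themes_per_interview):
    """Share of all observed themes discovered after each interview, in order."""
    all_themes = set().union(*themes_per_interview)
    if not all_themes:
        return []
    seen = set()
    curve = []
    for themes in themes_per_interview:
        seen |= themes
        curve.append(len(seen) / len(all_themes))
    return curve


def interviews_to_reach(curve, threshold=0.8):
    """Number of interviews needed to reach the threshold, or None if never reached."""
    for index, share in enumerate(curve, start=1):
        if share >= threshold:
            return index
    return None


# Made-up coded interviews: each set holds the themes one participant raised.
interviews = [
    {"price", "speed"},
    {"price", "trust"},
    {"speed"},
    {"onboarding"},
    {"trust", "support"},
    {"price"},
]

curve = saturation_curve(interviews)
print([f"{share:.0%}" for share in curve])
print("Interviews needed for 80%:", interviews_to_reach(curve))
```

A curve that flattens early suggests more interviews will add little, while a curve that is still climbing at the end is a hint to keep going.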

Interview quality. Conducting interviews requires objectivity, effective pacing, and asking the right questions, skills that take practice to perfect. While AI may not (yet) equal the expertise of a seasoned researcher, it might already surpass less experienced ones. For example, AI moderators have infinite patience, can probe deeper without fear of discomfort, and have no emotional attachment to feedback that might otherwise color the results. Bias in AI can still be an issue, but the same holds true for people. In using the GPT-4 interviewer and working with the AI team at TheyDo, I’ve found that AI can exhibit a machine-like precision in its questions and analysis. Furthermore, there is a push to customize AI-interviewer personalities to better match the target group and build rapport. And, while this is not realized yet, AI also has the potential to adapt the interview process in real time: continuously monitoring all interviews to decide which topics are saturated and where knowledge gaps remain, then steering new interviews toward those gaps to optimize overall insight saturation.

Quantitative analysis. Since AI-moderated research works with larger numbers of participants, a moderate amount of quantitative analysis can be applied that would not be possible with traditional user interviews.

Ease of use (even for non-skilled researchers). AI-moderated platforms don’t require a lot of skill to use. They do the research and analysis by themselves, and soon they will also help to set up topic guides, pick the right audiences, and more. As such, these tools might open up decent-quality research to teams that would otherwise not use it at all.

Participant engagement. AI-moderated tools lower the barrier for participants to engage in research. Participants can engage at any time, in any language, and in any format, such as text, speech, or video. This makes it much more convenient to participate for people with busy schedules (such as B2B groups), people who haven’t mastered a language yet, people with low literacy, or those with speech or visual impairments.

Why we still need human interviewers

While AI-moderated interviews promise many benefits, they cannot fully replace human interviewers for several reasons.

Necessity and/or lower cost. Some target groups are harder to recruit due to a combination of specific gender, age, profession, income, heritage, or other factors. For example, elderly people might not be digitally capable, and disadvantaged groups might not have access to digital tools at all. And when it costs 500 euros in recruitment costs and incentives to interview a neurosurgeon, would you rely on an AI to do the interview?

Interview quality. AI research tools cannot match the depth and empathetic accuracy of a seasoned human researcher. First of all, AI tools run into technical limitations, such as hallucinating, forgetting details, or failing to stick to a script beyond a certain interview depth and length. People are also more likely to put effort into their responses when there’s a real person there to listen. When the research centers on sensitive topics such as health or finance, people will often be more at ease sharing their deepest experiences with a real human, who can build trust, rapport, and emotional connection. Furthermore, good interviewers can adapt to the conversation flow, employ interactive techniques like visual thinking or card sorting, and respond to non-verbal cues, all of which are challenging for AI to emulate. And lastly, conducting interviews in participants’ homes, workplaces, or other relevant settings offers deeper insight into their environment and daily life. It will be interesting to see if the rapid progression of AI will find a way to compensate for these current shortcomings.

Lived experience. No matter how good AI gets, it cannot replace the depth of real human connections and experiences gained through hands-on work. Doing old-school research forces you to learn about a topic, to empathize with customers, and thus to become more attuned to your customers’ needs, pains, and gains. There is no substitute for seeing a participant struggle with a difficult subject, having a prototype applauded or burnt down, or learning an unexpected insight. AI-moderated tools can get close through videos and audio, but this firsthand experience remains much more impactful than reviewing a report that magically appears out of thin air. This is especially true if your goal is to wow a broader set of stakeholders. In an age where data is increasingly affordable and abundant, the genuine connections and experiences gained through direct human interaction gain ever more value.

Conclusions and looking ahead

So, does this spell the end for human-led customer research? Here are my thoughts and predictions - but don’t hesitate to add your own in the comments!

For now, AI-moderated tools will supplement human-led research. They are ideal for easy-to-recruit target groups and when speed, diversity, and/or multilingual research are required. Traditional methods are still relevant when trust, lived experience, and reaching certain audiences are key. It will be interesting to see how the two will supplement each other; I predict we’ll see a hybrid approach emerge where several human interviews are supplemented by AI-moderated research tracks.

Enabling real-time insights for all. AI-moderated research tools dramatically lower the barrier to conducting customer research, even on smaller budgets or with limited skill levels. Together with other tools that go in this direction, such as TheyDo, this trend will further decrease the cost of implementing customer-centric decision-making. Additionally, it will help shift customer research from a project-based approach to a more continuous cycle, ensuring that key insights such as customer journeys and personas remain current and relevant.

This is only the beginning. Based on the case studies presented on the websites of Tellet.ai and Outset.ai, the initial outcomes appear promising. Furthermore, given the swift advancements in Large Language Models (LLMs), we can anticipate a rapid improvement in the capability of these research tools.

The rise of qualitative performance indicators? As the distinction between qualitative and quantitative data blurs, qualitative insights might increasingly be recognized for their ability to inform and drive strategic goals alongside traditional KPIs. This approach enriches the measurement of progress by incorporating customer feedback, employee experiences, and other non-numeric data, thus offering a more comprehensive view of an organization's performance.

Special Credits

A special thank you goes out to Aaron Cannon (Co-founder at Outset.ai), Merik te Grotenhuis (Co-founder at Tellet.ai), and Boris Jockin (Partner & Lead Data-infused Design at Koos Service Design) for their input and letting me use their visual resources.

Read more

Kaplan, K. (2022, December 8). How much time does it take to create a journey map? Nielsen Norman Group. https://www.nngroup.com/articles/journey-map-how-much-time/

Mason, M. (2010). Sample size and saturation in PhD studies using qualitative interviews. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 11(3). https://doi.org/10.17169/fqs-11.3.1428

Guest, G., Bunce, A., & Johnson, L. (2006). How many interviews are enough? An experiment with data saturation and variability. Field Methods, 18(1), 59-82.

TeaCup Lab (2023, October 15). How much does it cost to recruit participants for my research? https://www.teacuplab.com/blog/how-much-does-it-cost-to-recruit-participants-for-my-research/

Outset - the AI-Moderated Research platform. https://outset.ai/

Tellet - AI moderated research interviews and analysis. https://tellet.ai/